OK, so you figured out you need to conduct a survey. What’s next?

Everyone’s gut reaction is to start writing questions. But if you want to do it right, this is exactly what you should not do. In fact, resist all urges to even think about what questions to ask. To write a better survey, the first question you need to answer is:

1. What am I interested in finding out?

It is very important that you do not phrase the answer to this as a survey question. You never really cared how people respond to any particular question anyway, right? You care about what is behind those responses. Let’s say you want to know if a customer likes a product. Maybe you want to know if they are likely to refer you, as a service provider, to others. Ask yourself what demographic information is relevant to your study. Make an exhaustive list of what you want to find out.

Now you write questions? No! You still should not. Rather, ask yourself…

2. What will you do with this information?

You have to think about this question in two different ways, as two sequential steps.

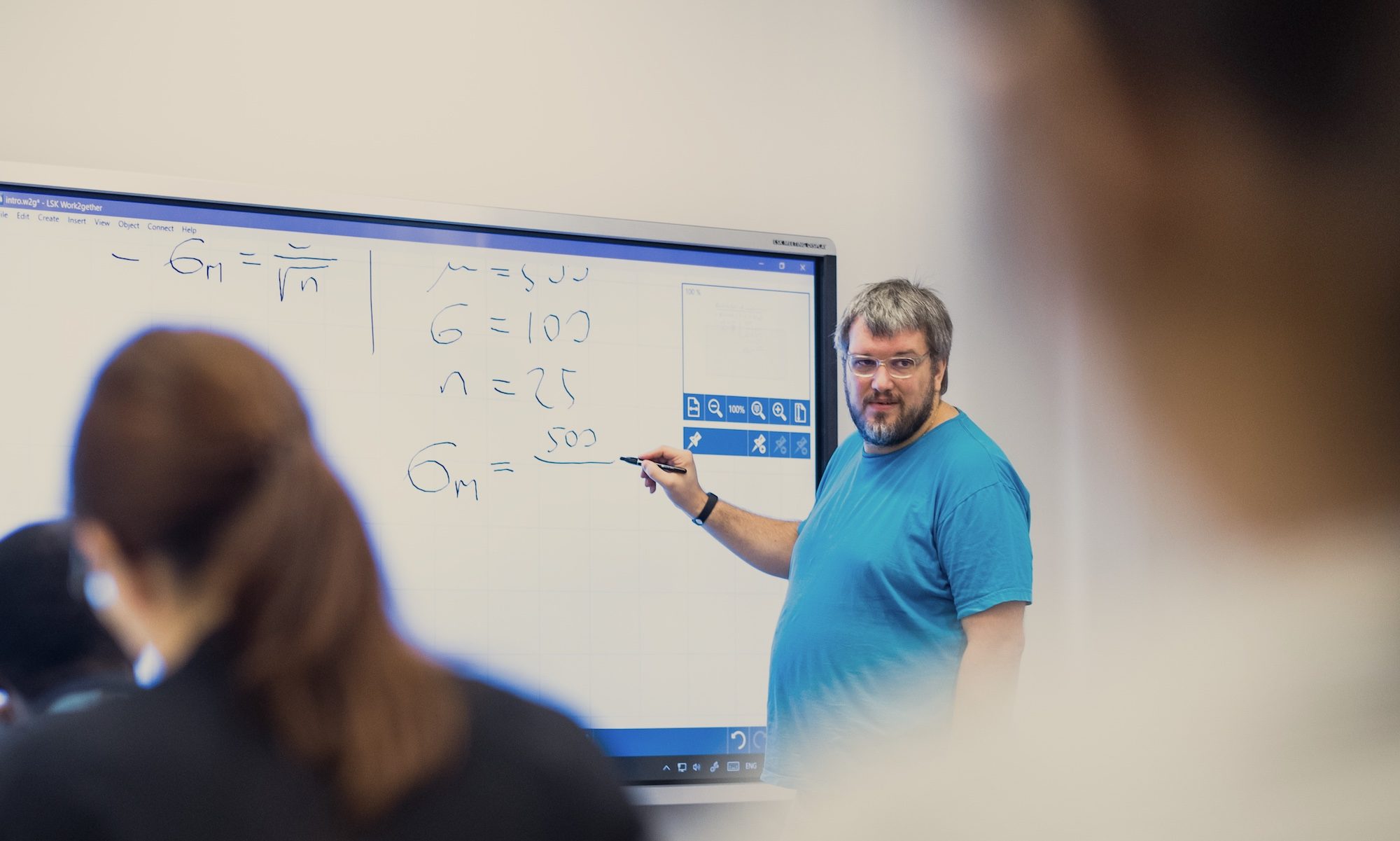

a. How will I analyze this data? Develop an analytical strategy. Will I look at (or present) a histogram? Do I want to see any association between two of the constructs defined in point 1? Are older or younger people more likely to refer my services to others? Men or women? Compile an exhaustive list of the questions you want the data to answer. Once you have asked your questions, come up with an analytical strategy. It could be as simple as calculating a mean or running a cross-tabulation, it could be a simple inferential statistic like a correlation or a two-sample t-test, or it could be something as complex as an instrumental variable regression model to causally ascertain the relationship between a key independent variable and the dependent variable. For the latter, you may also need to find three or four plausible instruments. Just make sure you have a preliminary analytical strategy.

b. Know what you will do with the information. Devise action strategies. If I see that young people do not refer my service to friends, I will develop a marketing strategy that will nudge young people to do this. If you are not the person taking action on the survey, devise a recommendation strategy instead. If you work for a client (even if it is in-house), push them hard on devising this strategy before you start the survey. The better they know what they want to do with the data, the better chance you have of writing a useful survey for them.
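As a minimal sketch of the simpler end of the analytical strategy in step 2a, the snippet below builds a cross-tabulation and compares referral rates by age group using only Python's standard library. The data, the group names (`under_35`, `35_plus`) and the helper functions are made up for illustration; they are assumptions, not results from any real survey.

```python
from collections import Counter
from statistics import mean

# Hypothetical responses as (age_group, would_refer) pairs.
# This is made-up data, used only to dry-run the analysis plan.
responses = [
    ("under_35", "yes"), ("under_35", "no"), ("under_35", "no"),
    ("35_plus", "yes"), ("35_plus", "yes"), ("35_plus", "no"),
]

def crosstab(rows):
    """Count how often each (age_group, would_refer) pair occurs."""
    return Counter(rows)

def referral_rate(rows, group):
    """Share of respondents in `group` who answered 'yes'."""
    answers = [1 if refer == "yes" else 0 for age, refer in rows if age == group]
    return mean(answers)

table = crosstab(responses)
rates = {group: referral_rate(responses, group) for group in ("under_35", "35_plus")}

for group, rate in rates.items():
    print(f"{group}: {rate:.0%} would refer")
```

Dry-running a toy version like this before fielding the survey shows which variables the analysis needs and, following step 2b, which result would trigger which action.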

To aid steps 1 and 2, it is a good idea to draw things out. It is OK to go back and forth between your current and previous step, but don’t go back any further than that.

3. Take the constructs you identified as crucial and figure out how best to operationalize them. Chances are, this is the stage where the constructs (which, if you are following these steps closely, are drawn up in a web of relationships of interest) are going to start turning into survey questions. It is OK to ask multiple survey questions to tap one construct. Keep an eye on (and, if need be, modify) the analytical strategy developed in step 2 as you start operationalizing. Maybe you thought you would look at a correlation, but it turns out a simple yes-no question is the best way to ask about something. Then you will only have a dichotomy and not the continuous variable your planned correlation called for. So, you may need to adjust your analytical strategy. At this stage, don’t go back to step 1 anymore.
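The adjustment described in step 3 can be sketched as a small lookup from measurement levels to a first-pass analysis. The level names and the `suggest_analysis` helper are hypothetical, and the mapping is a deliberate simplification meant to show the idea, not a complete decision rule.

```python
# Illustrative measurement levels; the names are assumptions made for this sketch.
VALID_LEVELS = {"dichotomous", "categorical", "continuous"}

def suggest_analysis(x_level, y_level):
    """Return a rough first-pass analysis for two variables' measurement levels."""
    levels = {x_level, y_level}
    if not levels <= VALID_LEVELS:
        raise ValueError(f"unknown measurement level in {sorted(levels)}")
    if levels == {"continuous"}:
        return "correlation"
    if levels == {"dichotomous", "continuous"}:
        return "two-sample t-test"
    if "continuous" not in levels:
        return "cross-tabulation"
    # Anything else (e.g. multi-category by continuous) needs more thought.
    return "no simple default here; revisit the plan"

# The plan assumed two continuous measures...
planned = suggest_analysis("continuous", "continuous")
# ...but one construct ended up as a yes-no question.
revised = suggest_analysis("dichotomous", "continuous")
print(planned, "->", revised)
```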

Follow conventional rules of questionnaire design. Make sure you are asking questions, not just throwing words at the respondent: “Gender:” should be “What is your gender?” The survey process is a conversation. Don’t break the basic conversational rules. Make sure the response categories you offer are unique and mutually exclusive. Label all your response categories; there is no need to show people the numbers you will use in the analysis. Unless you are a survey researcher or quantitative social scientist (which you probably are, or are slowly becoming, if you got this far), it is wholly unnatural to map a conversation onto some numeric space, so don’t make people do it. And, very important, make sure the response categories actually answer the question. If the question starts with “how many”, the answer is never “strongly agree” or “disagree”.
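One way to keep the numeric mapping away from respondents is to hold it in a codebook that only the analyst sees. The sketch below assumes a hypothetical five-point agreement item; the labels, codes and function names are illustrative, not a standard.

```python
# Hypothetical codebook: labels are shown to respondents, codes stay with the analyst.
AGREEMENT = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neither agree nor disagree": 3,
    "Agree": 4,
    "Strongly agree": 5,
}

def labels_for_respondent(codebook):
    """Return only the text labels, in display order, with no numbers attached."""
    return list(codebook)

def code_responses(answers, codebook):
    """Translate collected label answers into numeric codes for analysis."""
    return [codebook[answer] for answer in answers]

def codes_are_unique(codebook):
    """Check that no two labels share the same numeric code."""
    return len(set(codebook.values())) == len(codebook)

shown = labels_for_respondent(AGREEMENT)
coded = code_responses(["Agree", "Strongly agree", "Disagree"], AGREEMENT)
print(shown)
print(coded)
```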

Remember that bipolar scales should be no wider than 7 points (11 for experts, but good luck labeling all of them…) and unipolar scales no wider than 5 points (7 for experts). Don’t let your respondent just run through tables of questions with the same responses. They will lose attention. It is better to write question-specific response categories.
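The width limits above are easy to check mechanically while drafting. This is a small sketch that encodes exactly the limits stated in the text; the `scale_is_too_wide` helper and the example labels are hypothetical.

```python
# Maximum scale widths as stated in the text, keyed by (polarity, expert respondents?).
MAX_POINTS = {
    ("bipolar", False): 7,
    ("bipolar", True): 11,
    ("unipolar", False): 5,
    ("unipolar", True): 7,
}

def scale_is_too_wide(labels, polarity, expert_respondents=False):
    """Return True if the scale has more points than the limit for its type."""
    return len(labels) > MAX_POINTS[(polarity, expert_respondents)]

# Hypothetical unipolar scale with five labeled points.
importance = ["Not at all", "Slightly", "Moderately", "Very", "Extremely"]
print(scale_is_too_wide(importance, "unipolar"))
```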

Write at a grade level that is around 3-5 years lower than the lower end of your population. Don’t use big words, homonyms, heteronyms or jargon that may not be understood by the respondent. Ask one question at a time (the words “and” and “or” are usually red flags in survey questions). You can offer a “don’t know” option; just remember that it encourages people not to engage with the survey and not to think about the survey. (And men, on average, are less likely to admit not knowing something anyway, so even if your goal is to find out if people don’t know something, your results will be biased no matter what, so why bother.) This is hardly an exhaustive list. There are a million more pointers and also great survey question writing tutorials online. Read through a few. What I see less of is tutorials demonstrating this broader process that looks beyond the question writing and, IMHO, is absolutely necessary to acquire quality responses that you can effectively use.
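The “and”/“or” red flag lends itself to a quick automated pass over a draft questionnaire. The sketch below is a rough lint, assuming questions are plain English strings; the `red_flags` helper and the draft questions are invented for illustration.

```python
import re

# Whole-word match, so words like "for" or "order" do not trigger the check.
CONJUNCTION = re.compile(r"\b(and|or)\b", re.IGNORECASE)

def red_flags(question):
    """Return the sorted set of conjunctions found in the question text."""
    return sorted({word.lower() for word in CONJUNCTION.findall(question)})

# Hypothetical draft questions.
draft = [
    "How satisfied are you with our price and quality?",
    "How satisfied are you with our price?",
]

flagged = {question: red_flags(question) for question in draft if red_flags(question)}
for question, words in flagged.items():
    print(f"Check for a double question ({', '.join(words)}): {question}")
```

A flag here is only a prompt to reread the question; some uses of “and” or “or” are perfectly fine, so the final call stays with the author.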

Finally, please remember that most people hate surveys. This process ensures that you only ask what is necessary: what you know you need and know what to do with. The longer a survey is, the worse the data quality will be. Off the bat, fewer people will take a survey that seems long to them. It is a good idea to tell people how long the survey will take anyway, so they can make sure they have enough time when they do take it; without a ballpark estimate, some people may never take the survey because they don’t know if they will have time. People’s attention spans are more and more limited these days. After 10 minutes, you can forget about them paying much attention, which will come at the expense of data quality. This process ensures no unnecessary questions are asked.

When you start a survey by writing questions, you become fond of those questions and more likely to ask them (or to hesitate over cutting them later). This is why it is especially important to first know what research question you need answered, and only then start designing the survey questions that will help you answer it.

Of course, there will always be that stakeholder who comes and says, “we should also ask question XYZ…”, and sometimes they have good ideas with obvious implications. But most of the time, this is not the case. The best weapon against such a proposal is to demonstrate the thoughtful development described above. Go back to them, show how and why every question is in this survey, and ask: with this in mind, why do you want to ask that too? They will either improve your design on the spot or back down. Either way, it is a win-win.